Smart Artificial Hand Developed for Amputees Combines User and Robotic Control

Scientists have successfully tested a new neuroprosthetic technology that combines robotic control with the user's own control, opening up new avenues in the interdisciplinary field of shared control for neuroprosthetic technologies.

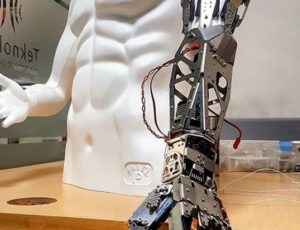

EPFL scientists are developing new approaches that combine individual finger control and automation to improve the control of robotic hands, particularly for amputees, and to improve grasping and manipulation. This interdisciplinary study between neuroengineering and robotics was tested successfully on three amputees and seven healthy subjects.

The technology combines two concepts from two different fields. Implementing the two together had never been done before for robotic hand control, and it contributes to the emerging field of shared control in neuroprosthetics.

One concept, from neuroengineering, involves deciphering the intended finger movement from muscle activity in the amputee's residual limb in order to control the individual fingers of the prosthetic hand, which had never been done before. The other, from robotics, lets the robotic hand help take hold of objects and stay in contact with them for a firm grip.

Aude Billard, who directs EPFL's Learning Algorithms and Systems Laboratory, explains: “When you hold an object in your hand and it starts to slip, you only have a few milliseconds to react. The robotic hand is able to react within 400 milliseconds. Equipped with pressure sensors along all of its fingers, it can react and stabilize the object before the brain can actually perceive that the object is slipping.”

How Does Shared Control Work?

First, the algorithm learns how to decode the user’s intention and translate it into finger movements of the prosthetic hand. To train the algorithm, which uses machine learning, the amputee must perform a series of hand movements. Sensors placed on the residual limb detect muscle activity, and the algorithm learns which hand movements correspond to which patterns of muscle activity. Once the user’s intended finger movements are decoded, this information can be used to control the individual fingers of the prosthetic hand.

“Because muscle signals can be noisy, we need a machine learning algorithm that extracts meaningful activity from these muscles and converts it into movements,” says researcher Katie Zhuang.
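The decoding step described above can be illustrated with a minimal sketch. The article does not say which learning algorithm the researchers use, so this example swaps in a simple nearest-centroid classifier as a stand-in. The function names, the gesture labels, the smoothing window and the toy signal values are all assumptions made for illustration, not details from the study.

```python
from statistics import mean


def envelope(samples, window=5):
    """Rectify a noisy raw signal and smooth it with a moving average."""
    rectified = [abs(s) for s in samples]
    return [
        mean(rectified[max(0, i - window + 1): i + 1])
        for i in range(len(rectified))
    ]


def features(channels, window=5):
    """One feature per sensor channel: the mean of its smoothed envelope."""
    return [mean(envelope(ch, window)) for ch in channels]


def train(examples):
    """Learn one average feature vector (centroid) per gesture label.

    examples: list of (channels, label) pairs recorded while the user
    performs known hand movements.
    """
    grouped = {}
    for channels, label in examples:
        grouped.setdefault(label, []).append(features(channels))
    return {
        label: [mean(column) for column in zip(*vectors)]
        for label, vectors in grouped.items()
    }


def decode(model, channels):
    """Return the gesture whose centroid is closest to a new recording."""
    x = features(channels)

    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(x, centroid))

    return min(model, key=lambda label: squared_distance(model[label]))


# Toy training data (hypothetical): two sensor channels, two gestures.
training = [
    ([[0.9, -1.1, 1.0, -0.8], [0.1, -0.1, 0.05, -0.1]], "index_flex"),
    ([[1.0, -0.9, 1.2, -1.0], [0.05, -0.1, 0.1, -0.05]], "index_flex"),
    ([[0.1, -0.05, 0.1, -0.1], [1.0, -1.2, 0.9, -1.0]], "thumb_flex"),
    ([[0.05, -0.1, 0.1, -0.1], [0.8, -1.0, 1.1, -0.9]], "thumb_flex"),
]
model = train(training)
print(decode(model, [[0.8, -1.0, 0.9, -1.1], [0.1, -0.1, 0.1, -0.1]]))
```

Rectifying and averaging is one common way to tame noisy muscle signals before classification. A real system would likely use more sensors, richer features and a more capable model.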

Next, the scientists engineered the algorithm so that robotic automation is activated when the user tries to grasp an object. The algorithm tells the prosthetic hand to close its fingers when an object comes into contact with the sensors on the surface of the hand. This automatic grasping is adapted from a previous study for robotic arms, designed to deduce the shape of objects and grasp them on the basis of tactile information alone, without the aid of visual signals.
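The hand-over between user and automation can be sketched as a single control update. This is only an illustration of the shared-control idea, not the researchers' implementation. The thresholds, the step size, the release rule and the function name `shared_control_step` are hypothetical. Finger closure runs from 0.0 (open) to 1.0 (closed), and pressures are normalized sensor readings.

```python
CONTACT_THRESHOLD = 0.05  # hypothetical: pressure above this means "touching"
TARGET_PRESSURE = 0.40    # hypothetical: pressure that counts as a firm grip
RELEASE_LEVEL = 0.05      # hypothetical: commands below this mean "open the hand"
STEP = 0.1                # hypothetical: closure added per control update


def shared_control_step(closures, user_command, pressures):
    """Return the new closure of each finger for one control update.

    closures:     current closure per finger
    user_command: closure per finger decoded from the user's muscle activity
    pressures:    pressure sensor reading per finger
    """
    in_contact = any(p > CONTACT_THRESHOLD for p in pressures)
    wants_release = max(user_command) < RELEASE_LEVEL

    # No object touched, or the user is opening the hand:
    # the user keeps full control of every finger.
    if not in_contact or wants_release:
        return list(user_command)

    # An object is touched: automation closes each finger until it
    # presses firmly enough. If pressure later drops (the object slips),
    # the same rule tightens the grip again.
    updated = []
    for closure, pressure in zip(closures, pressures):
        if pressure < TARGET_PRESSURE:
            closure = min(1.0, closure + STEP)
        updated.append(closure)
    return updated
```

Calling this function in a loop, once per sensor reading, gives the behavior described above: the user steers the fingers freely until contact, then the hand secures the object on its own until the user signals release.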

Many challenges remain in engineering the algorithm before it can be implemented in a commercially available hand prosthesis. For now, the algorithm is still being tested on a robot provided by an external party.

“Our shared approach to controlling robotic hands could be used in a variety of neuroprosthetic applications, such as brain-machine interfaces,” said Silvestro Micera, Professor of Bioelectronics at Scuola Superiore Sant’Anna and holder of EPFL’s Bertarelli Foundation Chair in Translational Neuroengineering.

If you are interested, there is a video that explains the technology in more detail.