Researchers at OpenAI had artificial intelligence agents play hide-and-seek about 500 million times in an effort to develop more sophisticated artificial intelligence. Over those 500 million games, the agents developed increasingly complex behaviors.

At the beginning of life on Earth, organisms had little or no coordination skills. Billions of years of evolution through competition and natural selection have led to today’s life forms and human intelligence.

Researchers at OpenAI, a nonprofit artificial intelligence laboratory in San Francisco, are testing a hypothesis based on the evolution of organisms: if you simulate competition similar to the competition found in the natural world, can a more sophisticated artificial intelligence develop?

The OpenAI work builds on two ideas. The first is multi-agent learning, in which multiple algorithms are placed in competition or coordination with one another to provoke new behaviors. The second is reinforcement learning, a machine learning technique that learns to achieve a goal through trial and error.

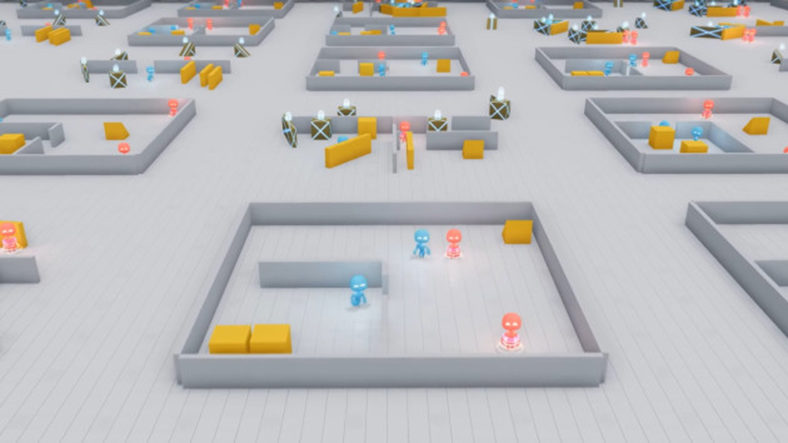

OpenAI has published the first results of the study in a paper. The researchers had two rival teams of AI agents play a simple game of hide-and-seek millions of times. Over the course of those games, the teams developed complex hiding and seeking strategies.

The researchers scaled up existing artificial intelligence techniques to see what behaviors would emerge.

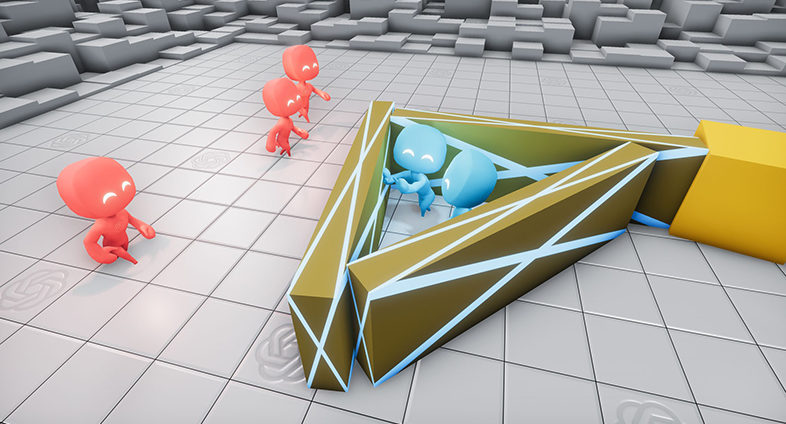

For the hide-and-seek game, which was repeated 500 million times, the researchers designed a virtual environment made up of boxes, ramps, and movable and immovable barricades. The AI agents in this environment were split into two teams: the hiders and the seekers. The hiders were rewarded for staying hidden and penalized for being found. The seekers were rewarded for finding the hiders and penalized for failing to find them. The researchers also set a time limit for each game.
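The reward scheme described above can be illustrated with a minimal Python sketch. The article does not give the exact reward values or how they are computed, so the per-step values of +1 and -1, the zero-sum structure, and the names `team_rewards` and `play_episode` are assumptions made for illustration only, not OpenAI's implementation.

```python
from dataclasses import dataclass


@dataclass
class StepRewards:
    """Rewards handed to each team for a single time step."""
    hiders: float
    seekers: float


def team_rewards(any_hider_seen: bool) -> StepRewards:
    # Assumed values: +1 for the team that is succeeding, -1 for the other.
    # The article only says each team is rewarded or penalized.
    if any_hider_seen:
        return StepRewards(hiders=-1.0, seekers=1.0)
    return StepRewards(hiders=1.0, seekers=-1.0)


def play_episode(seen_per_step: list[bool], time_limit: int) -> tuple[float, float]:
    """Sum the team rewards over one game, stopping at the time limit.

    seen_per_step[i] is True if any hider is visible to a seeker at step i.
    """
    hider_total = 0.0
    seeker_total = 0.0
    for step, seen in enumerate(seen_per_step):
        if step >= time_limit:
            break  # the game ends once the time limit is reached
        rewards = team_rewards(seen)
        hider_total += rewards.hiders
        seeker_total += rewards.seekers
    return hider_total, seeker_total


if __name__ == "__main__":
    # Hiders stay hidden for three steps; the fourth step is past the limit.
    print(play_episode([False, False, False, True], time_limit=3))
```

In this sketch one team's gain is always the other team's loss, which is one simple way to create the kind of competition the researchers describe.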

Over millions of games, the agents developed several strategies and counter-strategies. In the early stages, the teams used crude strategies. But after more than 25 million games, more sophisticated strategies emerged.

After 25 million games, the hiders learned to build a fort around themselves by moving boxes and barricades and locking them in place. Seekers searching the area could no longer find the hiders. The hiders also learned to coordinate, for example by passing objects to each other, which sped up fort building over time.

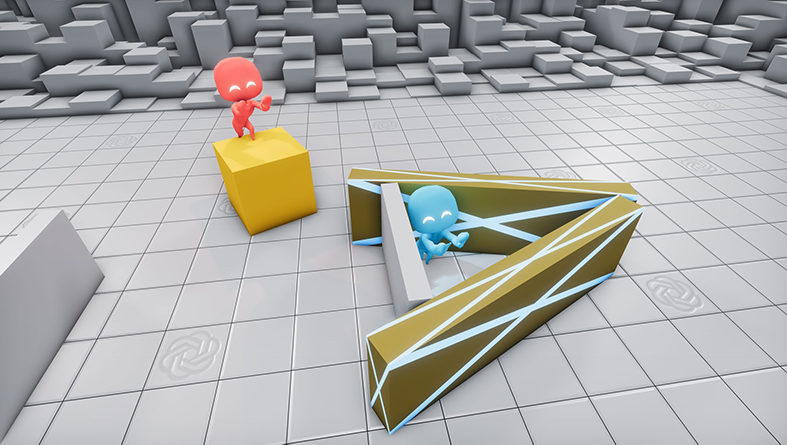

After 75 million games, the seekers developed a counter-strategy: they learned to carry the ramps in the area over to the fort and use them to climb its walls. After another 10 million games, the hiders learned to lock the ramps in place before building their fort, so that the seekers could not use them.

After 85 million games, the researchers thought the strategies had reached their final stage. However, two more strategies emerged after 380 million games.

This time, the seekers learned to break into the fort by using a locked ramp to climb onto an unlocked box. In response, the hiders learned to lock all ramps and boxes before building their fort.

“We didn’t tell the hiders or the seekers during the experiment to stand near a box or interact with it,” says Bowen Baker, a researcher. “However, they developed new forms of behavior by adapting to the other team through multi-agent competition.”

OpenAI’s approach to artificial intelligence research is distinctive. The lab continues to invest in developing new techniques, but it stands out from other laboratories mainly for how far it pushes existing techniques, largely by applying extensive computational resources.

The ongoing work at OpenAI measures and tests the limits of existing technologies. The research team plans to continue this line of research. According to the researchers, the first experiment did not come close to reaching the limits of the available computing resources.

According to the OpenAI researchers, the behaviors that artificial intelligence learns in this way may make it possible to solve existing problems.